Anyway, the news reminded me of the great reply sent by Dr. Edgar

Villanueva, congressman in Peru at the time, to Microsoft as a reply

to Microsofts attack on his proposal regarding the use of free software

in the public sector in Peru. As the text was not available from a

few of the URLs where it used to be available, I copy it here from

my

source to ensure it is available also in the future. Some

background information about that story is available in

an article from

Linux Journal in 2002.

Lima, 8th of April, 2002

To: Señor JUAN ALBERTO GONZÁLEZ

General Manager of Microsoft Perú

Dear Sir:

First of all, I thank you for your letter of March 25, 2002 in which you state the official position of Microsoft relative to Bill Number 1609, Free Software in Public Administration, which is indubitably inspired by the desire for Peru to find a suitable place in the global technological context. In the same spirit, and convinced that we will find the best solutions through an exchange of clear and open ideas, I will take this opportunity to reply to the commentaries included in your letter.

While acknowledging that opinions such as yours constitute a significant contribution, it would have been even more worthwhile for me if, rather than formulating objections of a general nature (which we will analyze in detail later) you had gathered solid arguments for the advantages that proprietary software could bring to the Peruvian State, and to its citizens in general, since this would have allowed a more enlightening exchange in respect of each of our positions.

With the aim of creating an orderly debate, we will assume that what you call "open source software" is what the Bill defines as "free software", since there exists software for which the source code is distributed together with the program, but which does not fall within the definition established by the Bill; and that what you call "commercial software" is what the Bill defines as "proprietary" or "unfree", given that there exists free software which is sold in the market for a price like any other good or service.

It is also necessary to make it clear that the aim of the Bill we are discussing is not directly related to the amount of direct savings that can by made by using free software in state institutions. That is in any case a marginal aggregate value, but in no way is it the chief focus of the Bill. The basic principles which inspire the Bill are linked to the basic guarantees of a state of law, such as:

- Free access to public information by the citizen.

- Permanence of public data.

- Security of the State and citizens.

To guarantee the free access of citizens to public information, it is indispensable that the encoding of data is not tied to a single provider. The use of standard and open formats gives a guarantee of this free access, if necessary through the creation of compatible free software.

To guarantee the permanence of public data, it is necessary that the usability and maintenance of the software does not depend on the goodwill of the suppliers, or on the monopoly conditions imposed by them. For this reason the State needs systems the development of which can be guaranteed due to the availability of the source code.

To guarantee national security or the security of the State, it is indispensable to be able to rely on systems without elements which allow control from a distance or the undesired transmission of information to third parties. Systems with source code freely accessible to the public are required to allow their inspection by the State itself, by the citizens, and by a large number of independent experts throughout the world. Our proposal brings further security, since the knowledge of the source code will eliminate the growing number of programs with *spy code*.

In the same way, our proposal strengthens the security of the citizens, both in their role as legitimate owners of information managed by the state, and in their role as consumers. In this second case, by allowing the growth of a widespread availability of free software not containing *spy code* able to put at risk privacy and individual freedoms.

In this sense, the Bill is limited to establishing the conditions under which the state bodies will obtain software in the future, that is, in a way compatible with these basic principles.

From reading the Bill it will be clear that once passed:

the law does not forbid the production of proprietary software

the law does not forbid the sale of proprietary software

the law does not specify which concrete software to use

the law does not dictate the supplier from whom software will be bought

the law does not limit the terms under which a software product can be licensed.

What the Bill does express clearly, is that, for software to be acceptable for the state it is not enough that it is technically capable of fulfilling a task, but that further the contractual conditions must satisfy a series of requirements regarding the license, without which the State cannot guarantee the citizen adequate processing of his data, watching over its integrity, confidentiality, and accessibility throughout time, as these are very critical aspects for its normal functioning.

We agree, Mr. Gonzalez, that information and communication technology have a significant impact on the quality of life of the citizens (whether it be positive or negative). We surely also agree that the basic values I have pointed out above are fundamental in a democratic state like Peru. So we are very interested to know of any other way of guaranteeing these principles, other than through the use of free software in the terms defined by the Bill.

As for the observations you have made, we will now go on to analyze them in detail:

Firstly, you point out that: "1. The bill makes it compulsory for all public bodies to use only free software, that is to say open source software, which breaches the principles of equality before the law, that of non-discrimination and the right of free private enterprise, freedom of industry and of contract, protected by the constitution."

This understanding is in error. The Bill in no way affects the rights you list; it limits itself entirely to establishing conditions for the use of software on the part of state institutions, without in any way meddling in private sector transactions. It is a well established principle that the State does not enjoy the wide spectrum of contractual freedom of the private sector, as it is limited in its actions precisely by the requirement for transparency of public acts; and in this sense, the preservation of the greater common interest must prevail when legislating on the matter.

The Bill protects equality under the law, since no natural or legal person is excluded from the right of offering these goods to the State under the conditions defined in the Bill and without more limitations than those established by the Law of State Contracts and Purchasing (T.U.O. by Supreme Decree No. 012-2001-PCM).

The Bill does not introduce any discrimination whatever, since it only establishes *how* the goods have to be provided (which is a state power) and not *who* has to provide them (which would effectively be discriminatory, if restrictions based on national origin, race religion, ideology, sexual preference etc. were imposed). On the contrary, the Bill is decidedly antidiscriminatory. This is so because by defining with no room for doubt the conditions for the provision of software, it prevents state bodies from using software which has a license including discriminatory conditions.

It should be obvious from the preceding two paragraphs that the Bill does not harm free private enterprise, since the latter can always choose under what conditions it will produce software; some of these will be acceptable to the State, and others will not be since they contradict the guarantee of the basic principles listed above. This free initiative is of course compatible with the freedom of industry and freedom of contract (in the limited form in which the State can exercise the latter). Any private subject can produce software under the conditions which the State requires, or can refrain from doing so. Nobody is forced to adopt a model of production, but if they wish to provide software to the State, they must provide the mechanisms which guarantee the basic principles, and which are those described in the Bill.

By way of an example: nothing in the text of the Bill would prevent your company offering the State bodies an office "suite", under the conditions defined in the Bill and setting the price that you consider satisfactory. If you did not, it would not be due to restrictions imposed by the law, but to business decisions relative to the method of commercializing your products, decisions with which the State is not involved.

To continue; you note that:" 2. The bill, by making the use of open source software compulsory, would establish discriminatory and non competitive practices in the contracting and purchasing by public bodies..."

This statement is just a reiteration of the previous one, and so the response can be found above. However, let us concern ourselves for a moment with your comment regarding "non-competitive ... practices."

Of course, in defining any kind of purchase, the buyer sets conditions which relate to the proposed use of the good or service. From the start, this excludes certain manufacturers from the possibility of competing, but does not exclude them "a priori", but rather based on a series of principles determined by the autonomous will of the purchaser, and so the process takes place in conformance with the law. And in the Bill it is established that *no one* is excluded from competing as far as he guarantees the fulfillment of the basic principles.

Furthermore, the Bill *stimulates* competition, since it tends to generate a supply of software with better conditions of usability, and to better existing work, in a model of continuous improvement.

On the other hand, the central aspect of competivity is the chance to provide better choices to the consumer. Now, it is impossible to ignore the fact that marketing does not play a neutral role when the product is offered on the market (since accepting the opposite would lead one to suppose that firms' expenses in marketing lack any sense), and that therefore a significant expense under this heading can influence the decisions of the purchaser. This influence of marketing is in large measure reduced by the bill that we are backing, since the choice within the framework proposed is based on the *technical merits* of the product and not on the effort put into commercialization by the producer; in this sense, competitiveness is increased, since the smallest software producer can compete on equal terms with the most powerful corporations.

It is necessary to stress that there is no position more anti-competitive than that of the big software producers, which frequently abuse their dominant position, since in innumerable cases they propose as a solution to problems raised by users: "update your software to the new version" (at the user's expense, naturally); furthermore, it is common to find arbitrary cessation of technical help for products, which, in the provider's judgment alone, are "old"; and so, to receive any kind of technical assistance, the user finds himself forced to migrate to new versions (with non-trivial costs, especially as changes in hardware platform are often involved). And as the whole infrastructure is based on proprietary data formats, the user stays "trapped" in the need to continue using products from the same supplier, or to make the huge effort to change to another environment (probably also proprietary).

You add: "3. So, by compelling the State to favor a business model based entirely on open source, the bill would only discourage the local and international manufacturing companies, which are the ones which really undertake important expenditures, create a significant number of direct and indirect jobs, as well as contributing to the GNP, as opposed to a model of open source software which tends to have an ever weaker economic impact, since it mainly creates jobs in the service sector."

I do not agree with your statement. Partly because of what you yourself point out in paragraph 6 of your letter, regarding the relative weight of services in the context of software use. This contradiction alone would invalidate your position. The service model, adopted by a large number of companies in the software industry, is much larger in economic terms, and with a tendency to increase, than the licensing of programs.

On the other hand, the private sector of the economy has the widest possible freedom to choose the economic model which best suits its interests, even if this freedom of choice is often obscured subliminally by the disproportionate expenditure on marketing by the producers of proprietary software.

In addition, a reading of your opinion would lead to the conclusion that the State market is crucial and essential for the proprietary software industry, to such a point that the choice made by the State in this bill would completely eliminate the market for these firms. If that is true, we can deduce that the State must be subsidizing the proprietary software industry. In the unlikely event that this were true, the State would have the right to apply the subsidies in the area it considered of greatest social value; it is undeniable, in this improbable hypothesis, that if the State decided to subsidize software, it would have to do so choosing the free over the proprietary, considering its social effect and the rational use of taxpayers money.

In respect of the jobs generated by proprietary software in countries like ours, these mainly concern technical tasks of little aggregate value; at the local level, the technicians who provide support for proprietary software produced by transnational companies do not have the possibility of fixing bugs, not necessarily for lack of technical capability or of talent, but because they do not have access to the source code to fix it. With free software one creates more technically qualified employment and a framework of free competence where success is only tied to the ability to offer good technical support and quality of service, one stimulates the market, and one increases the shared fund of knowledge, opening up alternatives to generate services of greater total value and a higher quality level, to the benefit of all involved: producers, service organizations, and consumers.

It is a common phenomenon in developing countries that local software industries obtain the majority of their takings in the service sector, or in the creation of "ad hoc" software. Therefore, any negative impact that the application of the Bill might have in this sector will be more than compensated by a growth in demand for services (as long as these are carried out to high quality standards). If the transnational software companies decide not to compete under these new rules of the game, it is likely that they will undergo some decrease in takings in terms of payment for licenses; however, considering that these firms continue to allege that much of the software used by the State has been illegally copied, one can see that the impact will not be very serious. Certainly, in any case their fortune will be determined by market laws, changes in which cannot be avoided; many firms traditionally associated with proprietary software have already set out on the road (supported by copious expense) of providing services associated with free software, which shows that the models are not mutually exclusive.

With this bill the State is deciding that it needs to preserve certain fundamental values. And it is deciding this based on its sovereign power, without affecting any of the constitutional guarantees. If these values could be guaranteed without having to choose a particular economic model, the effects of the law would be even more beneficial. In any case, it should be clear that the State does not choose an economic model; if it happens that there only exists one economic model capable of providing software which provides the basic guarantee of these principles, this is because of historical circumstances, not because of an arbitrary choice of a given model.

Your letter continues: "4. The bill imposes the use of open source software without considering the dangers that this can bring from the point of view of security, guarantee, and possible violation of the intellectual property rights of third parties."

Alluding in an abstract way to "the dangers this can bring", without specifically mentioning a single one of these supposed dangers, shows at the least some lack of knowledge of the topic. So, allow me to enlighten you on these points.

On security:

National security has already been mentioned in general terms in the initial discussion of the basic principles of the bill. In more specific terms, relative to the security of the software itself, it is well known that all software (whether proprietary or free) contains errors or "bugs" (in programmers' slang). But it is also well known that the bugs in free software are fewer, and are fixed much more quickly, than in proprietary software. It is not in vain that numerous public bodies responsible for the IT security of state systems in developed countries require the use of free software for the same conditions of security and efficiency.

What is impossible to prove is that proprietary software is more secure than free, without the public and open inspection of the scientific community and users in general. This demonstration is impossible because the model of proprietary software itself prevents this analysis, so that any guarantee of security is based only on promises of good intentions (biased, by any reckoning) made by the producer itself, or its contractors.

It should be remembered that in many cases, the licensing conditions include Non-Disclosure clauses which prevent the user from publicly revealing security flaws found in the licensed proprietary product.

In respect of the guarantee:

As you know perfectly well, or could find out by reading the "End User License Agreement" of the products you license, in the great majority of cases the guarantees are limited to replacement of the storage medium in case of defects, but in no case is compensation given for direct or indirect damages, loss of profits, etc... If as a result of a security bug in one of your products, not fixed in time by yourselves, an attacker managed to compromise crucial State systems, what guarantees, reparations and compensation would your company make in accordance with your licensing conditions? The guarantees of proprietary software, inasmuch as programs are delivered ``AS IS'', that is, in the state in which they are, with no additional responsibility of the provider in respect of function, in no way differ from those normal with free software.

On Intellectual Property:

Questions of intellectual property fall outside the scope of this bill, since they are covered by specific other laws. The model of free software in no way implies ignorance of these laws, and in fact the great majority of free software is covered by copyright. In reality, the inclusion of this question in your observations shows your confusion in respect of the legal framework in which free software is developed. The inclusion of the intellectual property of others in works claimed as one's own is not a practice that has been noted in the free software community; whereas, unfortunately, it has been in the area of proprietary software. As an example, the condemnation by the Commercial Court of Nanterre, France, on 27th September 2001 of Microsoft Corp. to a penalty of 3 million francs in damages and interest, for violation of intellectual property (piracy, to use the unfortunate term that your firm commonly uses in its publicity).

You go on to say that: "The bill uses the concept of open source software incorrectly, since it does not necessarily imply that the software is free or of zero cost, and so arrives at mistaken conclusions regarding State savings, with no cost-benefit analysis to validate its position."

This observation is wrong; in principle, freedom and lack of cost are orthogonal concepts: there is software which is proprietary and charged for (for example, MS Office), software which is proprietary and free of charge (MS Internet Explorer), software which is free and charged for (Red Hat, SuSE etc GNU/Linux distributions), software which is free and not charged for (Apache, Open Office, Mozilla), and even software which can be licensed in a range of combinations (MySQL).

Certainly free software is not necessarily free of charge. And the text of the bill does not state that it has to be so, as you will have noted after reading it. The definitions included in the Bill state clearly *what* should be considered free software, at no point referring to freedom from charges. Although the possibility of savings in payments for proprietary software licenses are mentioned, the foundations of the bill clearly refer to the fundamental guarantees to be preserved and to the stimulus to local technological development. Given that a democratic State must support these principles, it has no other choice than to use software with publicly available source code, and to exchange information only in standard formats.

If the State does not use software with these characteristics, it will be weakening basic republican principles. Luckily, free software also implies lower total costs; however, even given the hypothesis (easily disproved) that it was more expensive than proprietary software, the simple existence of an effective free software tool for a particular IT function would oblige the State to use it; not by command of this Bill, but because of the basic principles we enumerated at the start, and which arise from the very essence of the lawful democratic State.

You continue: "6. It is wrong to think that Open Source Software is free of charge. Research by the Gartner Group (an important investigator of the technological market recognized at world level) has shown that the cost of purchase of software (operating system and applications) is only 8% of the total cost which firms and institutions take on for a rational and truly beneficial use of the technology. The other 92% consists of: installation costs, enabling, support, maintenance, administration, and down-time."

This argument repeats that already given in paragraph 5 and partly contradicts paragraph 3. For the sake of brevity we refer to the comments on those paragraphs. However, allow me to point out that your conclusion is logically false: even if according to Gartner Group the cost of software is on average only 8% of the total cost of use, this does not in any way deny the existence of software which is free of charge, that is, with a licensing cost of zero.

In addition, in this paragraph you correctly point out that the service components and losses due to down-time make up the largest part of the total cost of software use, which, as you will note, contradicts your statement regarding the small value of services suggested in paragraph 3. Now the use of free software contributes significantly to reduce the remaining life-cycle costs. This reduction in the costs of installation, support etc. can be noted in several areas: in the first place, the competitive service model of free software, support and maintenance for which can be freely contracted out to a range of suppliers competing on the grounds of quality and low cost. This is true for installation, enabling, and support, and in large part for maintenance. In the second place, due to the reproductive characteristics of the model, maintenance carried out for an application is easily replicable, without incurring large costs (that is, without paying more than once for the same thing) since modifications, if one wishes, can be incorporated in the common fund of knowledge. Thirdly, the huge costs caused by non-functioning software ("blue screens of death", malicious code such as virus, worms, and trojans, exceptions, general protection faults and other well-known problems) are reduced considerably by using more stable software; and it is well known that one of the most notable virtues of free software is its stability.

You further state that: "7. One of the arguments behind the bill is the supposed freedom from costs of open-source software, compared with the costs of commercial software, without taking into account the fact that there exist types of volume licensing which can be highly advantageous for the State, as has happened in other countries."

I have already pointed out that what is in question is not the cost of the software but the principles of freedom of information, accessibility, and security. These arguments have been covered extensively in the preceding paragraphs to which I would refer you.

On the other hand, there certainly exist types of volume licensing (although unfortunately proprietary software does not satisfy the basic principles). But as you correctly pointed out in the immediately preceding paragraph of your letter, they only manage to reduce the impact of a component which makes up no more than 8% of the total.

You continue: "8. In addition, the alternative adopted by the bill (I) is clearly more expensive, due to the high costs of software migration, and (II) puts at risk compatibility and interoperability of the IT platforms within the State, and between the State and the private sector, given the hundreds of versions of open source software on the market."

Let us analyze your statement in two parts. Your first argument, that migration implies high costs, is in reality an argument in favor of the Bill. Because the more time goes by, the more difficult migration to another technology will become; and at the same time, the security risks associated with proprietary software will continue to increase. In this way, the use of proprietary systems and formats will make the State ever more dependent on specific suppliers. Once a policy of using free software has been established (which certainly, does imply some cost) then on the contrary migration from one system to another becomes very simple, since all data is stored in open formats. On the other hand, migration to an open software context implies no more costs than migration between two different proprietary software contexts, which invalidates your argument completely.

The second argument refers to "problems in interoperability of the IT platforms within the State, and between the State and the private sector" This statement implies a certain lack of knowledge of the way in which free software is built, which does not maximize the dependence of the user on a particular platform, as normally happens in the realm of proprietary software. Even when there are multiple free software distributions, and numerous programs which can be used for the same function, interoperability is guaranteed as much by the use of standard formats, as required by the bill, as by the possibility of creating interoperable software given the availability of the source code.

You then say that: "9. The majority of open source code does not offer adequate levels of service nor the guarantee from recognized manufacturers of high productivity on the part of the users, which has led various public organizations to retract their decision to go with an open source software solution and to use commercial software in its place."

This observation is without foundation. In respect of the guarantee, your argument was rebutted in the response to paragraph 4. In respect of support services, it is possible to use free software without them (just as also happens with proprietary software), but anyone who does need them can obtain support separately, whether from local firms or from international corporations, again just as in the case of proprietary software.

On the other hand, it would contribute greatly to our analysis if you could inform us about free software projects *established* in public bodies which have already been abandoned in favor of proprietary software. We know of a good number of cases where the opposite has taken place, but not know of any where what you describe has taken place.

You continue by observing that: "10. The bill discourages the creativity of the Peruvian software industry, which invoices 40 million US$/year, exports 4 million US$ (10th in ranking among non-traditional exports, more than handicrafts) and is a source of highly qualified employment. With a law that encourages the use of open source, software programmers lose their intellectual property rights and their main source of payment."

It is clear enough that nobody is forced to commercialize their code as free software. The only thing to take into account is that if it is not free software, it cannot be sold to the public sector. This is not in any case the main market for the national software industry. We covered some questions referring to the influence of the Bill on the generation of employment which would be both highly technically qualified and in better conditions for competition above, so it seems unnecessary to insist on this point.

What follows in your statement is incorrect. On the one hand, no author of free software loses his intellectual property rights, unless he expressly wishes to place his work in the public domain. The free software movement has always been very respectful of intellectual property, and has generated widespread public recognition of its authors. Names like those of Richard Stallman, Linus Torvalds, Guido van Rossum, Larry Wall, Miguel de Icaza, Andrew Tridgell, Theo de Raadt, Andrea Arcangeli, Bruce Perens, Darren Reed, Alan Cox, Eric Raymond, and many others, are recognized world-wide for their contributions to the development of software that is used today by millions of people throughout the world. On the other hand, to say that the rewards for authors rights make up the main source of payment of Peruvian programmers is in any case a guess, in particular since there is no proof to this effect, nor a demonstration of how the use of free software by the State would influence these payments.

You go on to say that: "11. Open source software, since it can be distributed without charge, does not allow the generation of income for its developers through exports. In this way, the multiplier effect of the sale of software to other countries is weakened, and so in turn is the growth of the industry, while Government rules ought on the contrary to stimulate local industry."

This statement shows once again complete ignorance of the mechanisms of and market for free software. It tries to claim that the market of sale of non- exclusive rights for use (sale of licenses) is the only possible one for the software industry, when you yourself pointed out several paragraphs above that it is not even the most important one. The incentives that the bill offers for the growth of a supply of better qualified professionals, together with the increase in experience that working on a large scale with free software within the State will bring for Peruvian technicians, will place them in a highly competitive position to offer their services abroad.

You then state that: "12. In the Forum, the use of open source software in education was discussed, without mentioning the complete collapse of this initiative in a country like Mexico, where precisely the State employees who founded the project now state that open source software did not make it possible to offer a learning experience to pupils in the schools, did not take into account the capability at a national level to give adequate support to the platform, and that the software did not and does not allow for the levels of platform integration that now exist in schools."

In fact Mexico has gone into reverse with the Red Escolar (Schools Network) project. This is due precisely to the fact that the driving forces behind the Mexican project used license costs as their main argument, instead of the other reasons specified in our project, which are far more essential. Because of this conceptual mistake, and as a result of the lack of effective support from the SEP (Secretary of State for Public Education), the assumption was made that to implant free software in schools it would be enough to drop their software budget and send them a CD ROM with Gnu/Linux instead. Of course this failed, and it couldn't have been otherwise, just as school laboratories fail when they use proprietary software and have no budget for implementation and maintenance. That's exactly why our bill is not limited to making the use of free software mandatory, but recognizes the need to create a viable migration plan, in which the State undertakes the technical transition in an orderly way in order to then enjoy the advantages of free software.

You end with a rhetorical question: "13. If open source software satisfies all the requirements of State bodies, why do you need a law to adopt it? Shouldn't it be the market which decides freely which products give most benefits or value?"

We agree that in the private sector of the economy, it must be the market that decides which products to use, and no state interference is permissible there. However, in the case of the public sector, the reasoning is not the same: as we have already established, the state archives, handles, and transmits information which does not belong to it, but which is entrusted to it by citizens, who have no alternative under the rule of law. As a counterpart to this legal requirement, the State must take extreme measures to safeguard the integrity, confidentiality, and accessibility of this information. The use of proprietary software raises serious doubts as to whether these requirements can be fulfilled, lacks conclusive evidence in this respect, and so is not suitable for use in the public sector.

The need for a law is based, firstly, on the realization of the fundamental principles listed above in the specific area of software; secondly, on the fact that the State is not an ideal homogeneous entity, but made up of multiple bodies with varying degrees of autonomy in decision making. Given that it is inappropriate to use proprietary software, the fact of establishing these rules in law will prevent the personal discretion of any state employee from putting at risk the information which belongs to citizens. And above all, because it constitutes an up-to-date reaffirmation in relation to the means of management and communication of information used today, it is based on the republican principle of openness to the public.

In conformance with this universally accepted principle, the citizen has the right to know all information held by the State and not covered by well- founded declarations of secrecy based on law. Now, software deals with information and is itself information. Information in a special form, capable of being interpreted by a machine in order to execute actions, but crucial information all the same because the citizen has a legitimate right to know, for example, how his vote is computed or his taxes calculated. And for that he must have free access to the source code and be able to prove to his satisfaction the programs used for electoral computations or calculation of his taxes.

I wish you the greatest respect, and would like to repeat that my office will always be open for you to expound your point of view to whatever level of detail you consider suitable.

Cordially,

DR. EDGAR DAVID VILLANUEVA NUÑEZ

Congressman of the Republic of Perú.

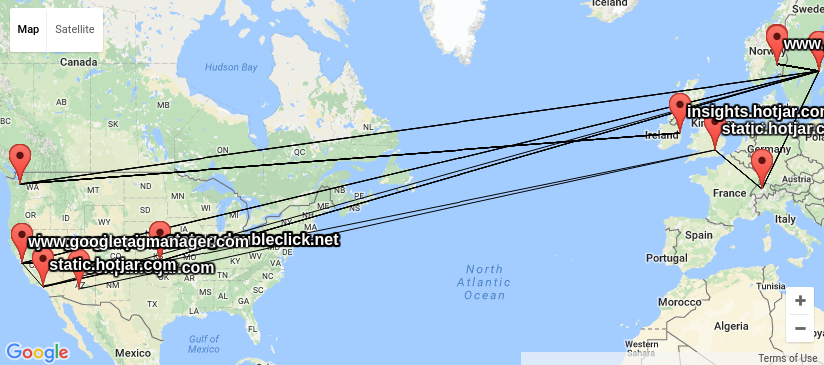

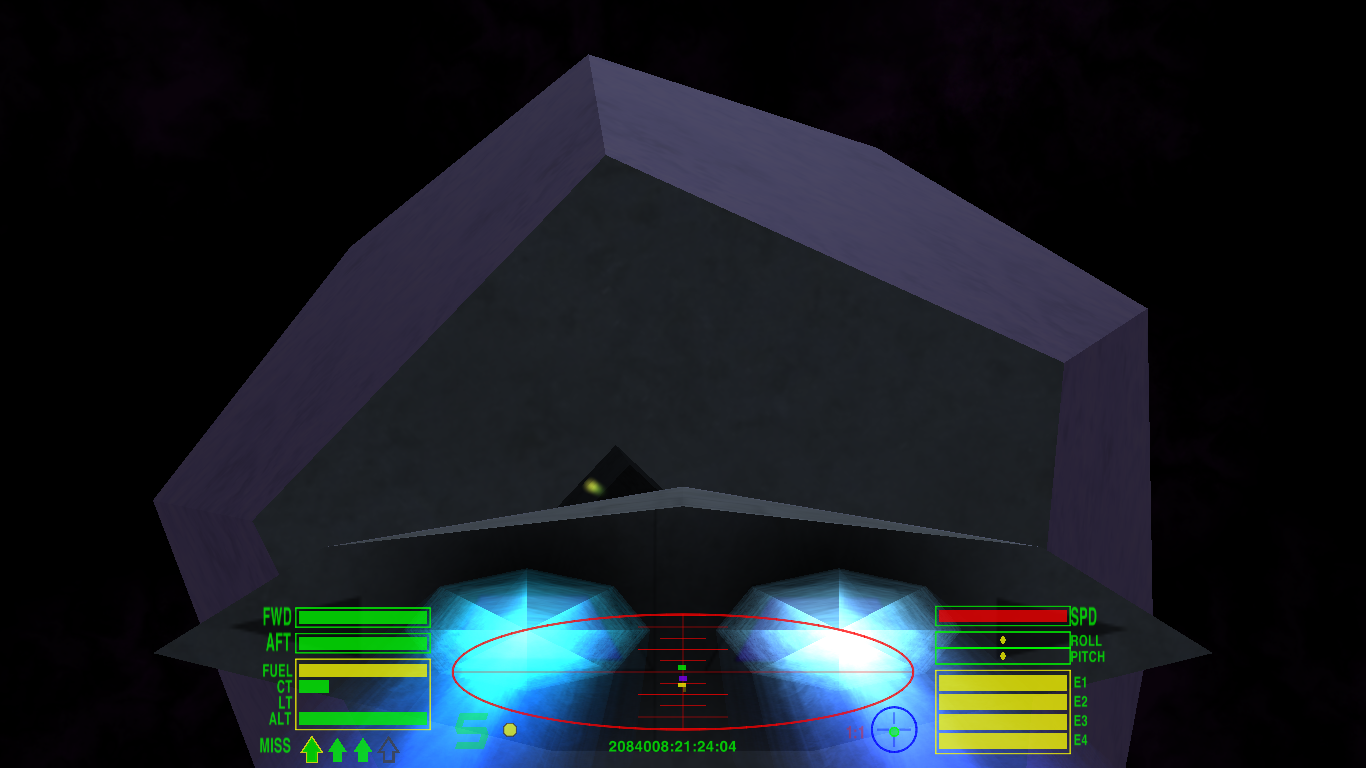

![[photo of subway info screen]](https://people.skolelinux.org/pere/blog/images/2018-03-02-ruter-debian-lenny.jpeg)